Axioms are self-evident truths. The axioms of Software Development isolate key basics underpinning successful software development. These axioms build on each other. Understanding the first one will aid your understanding of the subsequent ones. This is part of the series on the Axioms of Software Development. If you haven’t read the previous axioms, you can see them here.

It’s my aim that by helping you understand these axioms, I can help you resolve problems associated with coordinating developers, time and resources and ensuring your software project is successful.

9. Test Adequately

What is Testing?

Arguably, the ‘best’ way to test a modern web application would be to have a person sit down in front of a computer with a list of the features the application should support, click around the application to complete a task with each of those features, and inspect the result to see that it was done correctly.

However, as the number of features rises, this rapidly becomes impractical. For a tiny application, someone might be able to test all the features in a day or so of work, but any large application would take months (or years!) to click or type through every feature.

This would also be extremely tedious, repetitive, boring, high-precision work, as the vast majority of the time everything would just work correctly. Humans are really not very good at tedious, repetitive, boring, high-precision work.

Computers, however, are really good at tedious, repetitive, boring, high-precision work. A modern computer could calculate 2+2 and make sure it equals 4 perhaps a billion times a second if you asked it to. It would do this without complaint. If 2+2 didn’t equal 4 for some reason one time out of that billion, it would tell you that it happened without fail.

So, in order to test a web application, developers build a second piece of software (called a ‘test’ or a ‘specification’) which confirms that the feature they just wrote works as intended. This is quite often achieved by simulating a user clicking and typing around on the website, inspecting the results shown on the screen and checking what is saved in the database.

As you can imagine, with thousands of features, you would need to (and do) write thousands of tests. But any single feature may have anywhere from one to fifty ways to use it. Should we write tests for just one of those ways? Ten of them? Or all fifty?

Writing more tests generally results in more stable code. But there are drawbacks, not the least of which is the expense of paying someone to write them!

So this then raises our next question:

What is the Goal of Testing?

Software will have bugs as it’s being created. This is a known fact. Spending the amount of money it takes to make bug free software for critical systems like aircraft, spacecraft or medical equipment is not a viable option for your average web application.

Making a web application near 100% bug free at all times would inflate the price perhaps thousands of times over. At that point, you wouldn’t be able to afford to build it.

This is especially true when you realise that what one person might be calling a software bug can sometimes be described by another as an unimplemented feature or even an actual bona fide feature!

You can complicate this further by considering all the bugs that could possibly arise due to the end user’s operating system being out of date, or browser errors, or their internet connection being flaky, or a third party API your application depends on failing, or even bugs arising from your software being so popular that it runs into scaling issues.

So ‘bug free’ cannot be the goal. At least not in the real world.

Therefore, if ‘bug free’ is not the goal, what should the goal be?

This is hard to quantify. I have always promoted the idea that we should strive to make sure the common paths travelled by the end user through the application are as bug free as practically possible.

If a bug arises sporadically in about 1 in 100 requests, this might be tolerable if that feature is only used once per month by each user. Most users would then never even encounter the bug.
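To put a rough number on that, here is a back-of-the-envelope sketch. It assumes, purely for illustration, that each use of the feature is a single request, that failures are independent of each other, and that we look at one year of use (12 uses per user). The function name is made up for this example.

```python
def chance_of_hitting_bug(failure_rate: float, uses: int) -> float:
    """Probability that a user sees the bug at least once in `uses` attempts."""
    # Chance that every single use succeeds, assuming independent failures.
    chance_all_ok = (1 - failure_rate) ** uses
    return 1 - chance_all_ok


# Assumed numbers: 1 failure in 100 requests, feature used once a month for a year.
yearly = chance_of_hitting_bug(failure_rate=0.01, uses=12)
print(f"{yearly:.1%} of users would hit the bug in a year")
```

Under those assumptions, roughly 11% of users would run into the bug over a whole year, so the large majority would never see it.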

So this gives us the idea of “testing adequately”. You want to test what we call “the happy path”: what the software system should do if everything is going right. You don’t want to test every individual line of the code. That would be ridiculous, and no one has the money to do that.

Adequate tests allow developers to look forward, not back, when they build out new features for your application. Any incompatibility between old and new features is immediately visible, and it can be rapidly pinpointed and resolved by either updating the test to include the new functionality or fixing the new functionality so the existing test passes.

What to Test?

Modern software developers do not write every line of code in the software they develop. They use frameworks like Ruby on Rails with tens or hundreds of software libraries (gems) they call on to perform specific functions. This speeds development. It means a developer only has to write the code to achieve your individual needs. Pre-existing libraries can handle routine functions like user authentication, reading and writing from databases, correctly forming emails or integrating with payment providers.

So this gives you the first clue on what to test, and that is “test the code you write”. If a developer includes a whole software library that correctly formats an email, then they should not be writing tests to make sure the email was formatted correctly. What they should test is the contents of the email, that it is being sent to the correct person, that the subject is correct and so on.
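As a minimal sketch of that idea, written in Python for illustration rather than Rails: the standard library’s `EmailMessage` plays the role of the pre-existing library that handles formatting, while `build_welcome_email` is a hypothetical piece of code “you wrote”. The test checks only the recipient, subject and contents, not the formatting.

```python
from email.message import EmailMessage


def build_welcome_email(name: str, address: str) -> EmailMessage:
    """Our own code: decides who gets the email and what it says."""
    message = EmailMessage()  # the library takes care of correct formatting
    message["To"] = address
    message["Subject"] = f"Welcome, {name}!"
    message.set_content(f"Hi {name}, thanks for signing up.")
    return message


def test_welcome_email():
    message = build_welcome_email("Sam", "sam@example.com")
    # Test what we wrote: the recipient, the subject and the contents.
    assert message["To"] == "sam@example.com"
    assert message["Subject"] == "Welcome, Sam!"
    assert "thanks for signing up" in message.get_content()


test_welcome_email()
```

Notice there is no test that the headers are laid out correctly or that the body is encoded properly. That is the library’s job, and the library has its own tests.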

OK, now that you know to only write tests for the code you write, the question becomes: WHICH code deserves a test?

Testing every piece and possible combination of the code would be impractical if not impossible. We need to select the best code to test.

I recommend breaking this down into two broad categories.

First, test any complex algorithms or calculations with valid and invalid inputs, checking their outputs. If you wrote complex code for calculating the correct taxes and tariffs, it would be very smart to thoroughly test multiple combinations of inputs (including negative values). These tests are cheap to write and run, in both computing time and developer time, so you should be thorough.
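Here is a small sketch of what such a test might look like. The `price_with_tax` function and its rules (a flat tax rate, negative values rejected) are assumptions invented for this example, not a real tax calculation.

```python
from decimal import Decimal


def price_with_tax(net_price: Decimal, tax_rate: Decimal) -> Decimal:
    """Return the price including tax, rounded to whole cents."""
    if net_price < 0 or tax_rate < 0:
        raise ValueError("price and tax rate must not be negative")
    return (net_price * (1 + tax_rate)).quantize(Decimal("0.01"))


def test_price_with_tax():
    # Valid inputs: check the calculated output.
    assert price_with_tax(Decimal("100.00"), Decimal("0.10")) == Decimal("110.00")
    assert price_with_tax(Decimal("0.00"), Decimal("0.10")) == Decimal("0.00")
    # Invalid input: a negative price must be rejected, not silently calculated.
    try:
        price_with_tax(Decimal("-5.00"), Decimal("0.10"))
    except ValueError:
        pass
    else:
        raise AssertionError("negative price was accepted")


test_price_with_tax()
```

Each extra case is one or two lines and runs in a fraction of a second, which is why being thorough here costs so little.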

For the more complex features, like API integrations to external providers, or the usability of the site (clicking around and doing things) it is best to first test what we call the “Happy Path.” This is the path that most users are going to take if nothing strange is going on. With the Happy Path and complex calculations tested, you give your developers (and any other developer who might encounter the code-base in the future) great confidence they are not creating new problems when adding new features.

When Website Requirements Evolve

Let’s say we write a piece of software that dictates: before a user can sign up to this service, we have to check that they live within a specific area.

Later, you decide to allow people from a second area to sign up. In the intervening months or years since the initial requirement, you have taken on a new developer who wasn’t around when the original code was written, and who might not be aware that sign-ups were only allowed in certain areas.

The new developer thinks that this isn’t a problem and goes ahead and implements the new feature. If a test was written around the original requirement, that test will now fail, which in effect tells the developer: “warning, this new requirement violates the previous requirement”.

At that point the developer can go “Oh, I know why that is” and can go and fix it, or they can come back to you as a stakeholder and say, “hang on a sec, there is a requirement here about only allowing users from this location to sign up. Is the requirement no longer valid? Or is that earlier requirement only valid if another condition is met?”

You, as the software stakeholder, will be able to clarify: “The location requirement is only if they want to purchase these products. Add an additional check on the location requirement to check what product they are buying.”

The developer then easily adds an additional check and the matter is resolved. If you didn’t have tests, your developer likely wouldn’t know there was an incompatibility with the function they implemented. The new change would go into production and no one would know that the location requirement had been broken. You would then end up (in this fictitious example) with products going to the wrong customers.
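The end state of that story could be sketched as follows. All names here (the areas, the products, `can_sign_up`) are hypothetical and exist only to illustrate the scenario.

```python
# Hypothetical data for the example.
RESTRICTED_PRODUCTS = {"fresh-produce"}  # products the location rule applies to
DELIVERY_AREAS = {"north"}               # areas allowed to buy restricted products


def can_sign_up(area: str, product: str) -> bool:
    """The location requirement only applies to restricted products."""
    if product in RESTRICTED_PRODUCTS:
        return area in DELIVERY_AREAS
    return True


def test_original_location_requirement_still_holds():
    # Written with the original feature. This is the test that fails
    # if someone simply opens sign-ups to every area.
    assert can_sign_up("north", "fresh-produce")
    assert not can_sign_up("south", "fresh-produce")


def test_new_area_can_sign_up_for_other_products():
    # Added with the new requirement, after the stakeholder clarified it.
    assert can_sign_up("south", "gift-card")


test_original_location_requirement_still_holds()
test_new_area_can_sign_up_for_other_products()
```

The first test is the record of the old requirement. The new developer does not need to have been around when it was written, because the failing test tells them it exists.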

As your Website Grows, Complexity Grows

The above example covers one requirement. But when you view modern software applications you quickly discover there are tens if not hundreds of requirements that the software must meet to reach the intended result. For example, when a user clicks a critical button, it should achieve a specified result. Or, if a user loads a page, tests could confirm the correct content is loaded. Or, when a user logs into their account, they see their account information and they can achieve the purpose of their visit.

With the complexity of modern software, a comprehensive set of tests will alert developers whenever a change has unintended effects on other parts of the code base.

When Things Go Wrong

The other scenario that comes up often is when a user performs a certain sequence of actions that crashes the system, because they have managed to get it into an unknown, untested and undeveloped state.

In this situation, the developer would reproduce this error, write a test around that error and then fix the code to do the right thing in that specific state.

This way, as the system grows, you get a more complete set of tests that makes sure everything is working as intended.
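As a sketch of that workflow, imagine a shopping cart where clicking “remove” twice on the same item used to crash. The cart, the bug and the function name are all invented for this example.

```python
def remove_item(cart: dict[str, int], item: str) -> dict[str, int]:
    """Return a copy of the cart with one unit of `item` removed."""
    updated = dict(cart)
    # The fix: an item that is not in the cart is simply ignored. In the
    # imagined original code this case raised a KeyError and crashed.
    if item not in updated:
        return updated
    updated[item] -= 1
    if updated[item] <= 0:
        del updated[item]
    return updated


def test_removing_item_twice_does_not_crash():
    # Reproduces the exact sequence of actions the user performed.
    cart = {"book": 1}
    cart = remove_item(cart, "book")
    cart = remove_item(cart, "book")  # the second click used to crash
    assert cart == {}


test_removing_item_twice_does_not_crash()
```

The test stays in the suite after the fix, so if a later change reintroduces the crash, it is caught before a user finds it again.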

Adequate Testing Delivers Speed

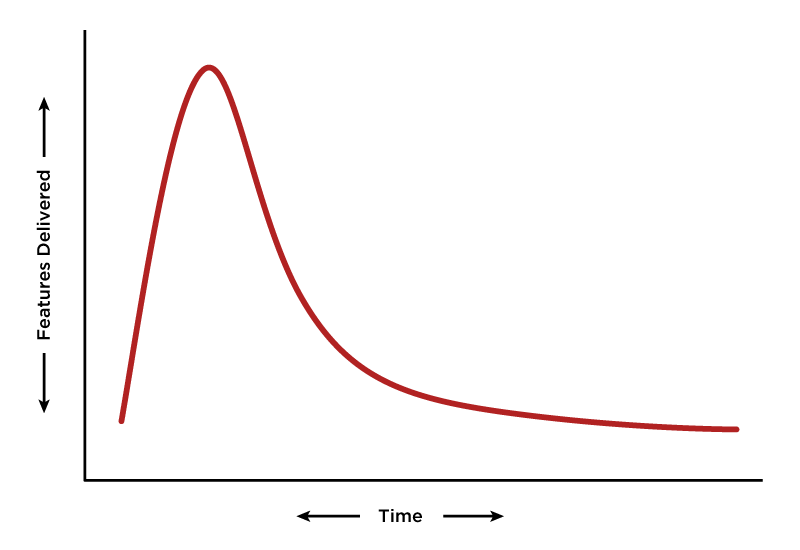

With the above in place, you end up developing at a more consistent pace. Development teams that do not test generally deliver a large spike of features early, but their output drops off rapidly over time: each new feature ends up breaking something else without them knowing, and they are constantly fighting fires in production caused by unintended changes.

Teams that test adequately are initially slower off the mark, but their delivery over time stays more constant, providing a greater number of features delivered over the life of the project.

Are These Axioms Helping You?

I hope you are getting value from these axioms. Please send me your feedback and thoughts.